Posted On : 19 December 2019

BERT stands for Bidirectional Encoder Representations from Transformers. It is an open-source, neural network-based technique for natural language processing (NLP) pre-training. The technology enables anyone to train their own state-of-the-art question-answering system. BERT has been pre-trained on Wikipedia content.

What Search Problems Does BERT Solve?

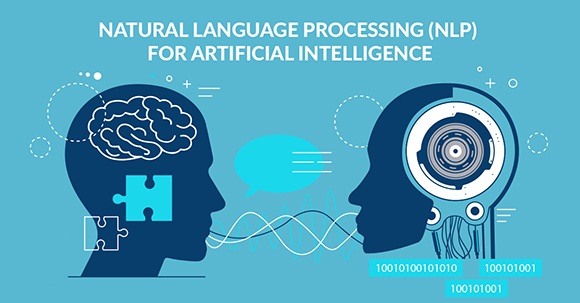

Particularly for longer, more conversational search queries, or queries where prepositions like "to" and "for" matter a great deal to the meaning, Search will be able to understand the context of the words in your query and extract the true intent. BERT models can consider the full context of a word by looking at the words that come before and after it, which is especially useful for understanding the intent behind search queries. You can search on Google in a way that feels natural to you. BERT will affect around 10% of search queries. It will also affect organic rankings and featured snippets. So this is no small change!

For example, here’s a search query provided by Google: “2019 brazil traveler to usa need a visa.” The word “to” and its relationship to the other words in the query are particularly important to understanding the meaning. The query is about a Brazilian traveling to the U.S., not the other way around.
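To make the role of "to" concrete, here is a minimal sketch in plain Python. It is not BERT and not how Google Search works; it only illustrates why an order-free keyword view cannot tell two opposite queries apart, while the words before and after "to" can. The reversed query `q2` and the helper names are assumptions made for illustration.

```python
from collections import Counter


def bag_of_words(query):
    """Order-free view of a query: just the word counts."""
    return Counter(query.lower().split())


def context_of(query, word):
    """Return the words before and after the first occurrence of `word`."""
    tokens = query.lower().split()
    i = tokens.index(word)
    return tokens[:i], tokens[i + 1:]


q1 = "2019 brazil traveler to usa need a visa"   # Google's example query
q2 = "2019 usa traveler to brazil need a visa"   # hypothetical reversed query

# A pure keyword view sees the two queries as identical.
same_keywords = bag_of_words(q1) == bag_of_words(q2)

# Looking at both sides of "to" separates them.
left1, right1 = context_of(q1, "to")
left2, right2 = context_of(q2, "to")

print("Same keywords:", same_keywords)
print("q1 context of 'to':", left1, right1)
print("q2 context of 'to':", left2, right2)
```

The two queries share every keyword, yet the destination that follows "to" differs, which is exactly the kind of signal a model that reads context in both directions can use.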

Does BERT Solve the Search Problem?

Regardless of what you're searching for, or what language you speak, Google wants users to let go of some of their keyword-ese and search in a way that feels natural. Even so, users will still stump Google from time to time. Even with BERT, Google doesn't always get it right.

How Many Languages Does the BERT Algorithm Cover Today? – 71

Initially, BERT was applied to US English queries only; it now covers 71 languages. As a result, international and multilingual websites will experience the BERT effect the most. BERT applies to the following languages:

Afrikaans, Albanian, Amharic, Arabic, Armenian, Azeri, Basque, Belarusian, Bulgarian, Catalan, Chinese (Simplified & Taiwan), Croatian, Czech, Danish, Dutch, English, Estonian, Farsi, Finnish, French, Galician, Georgian, German, Greek, Gujarati, Hebrew, Hindi, Hungarian, Icelandic, Indonesian, Italian, Japanese, Javanese, Kannada, Kazakh, Khmer, Korean, Kurdish, Kyrgyz, Lao, Latvian, Lithuanian, Macedonian, Malay (Brunei Darussalam & Malaysia), Malayalam, Maltese, Marathi, Mongolian, Nepali, Norwegian, Polish, Portuguese, Punjabi, Romanian, Russian, Serbian, Sinhalese, Slovak, Slovenian, Swahili, Swedish, Tagalog, Tajik, Tamil, Telugu, Thai, Turkish, Ukrainian, Urdu, Uzbek, Vietnamese and Spanish.

How Does BERT Affect SEO?

- BERT Will Help Google Better Understand Human Language - People are searching with longer, question-style queries. Your page can now rank for longer, trickier queries for which less relevant pages previously ranked better. It will also help bring more traffic from long-tail keywords to relevant pages. The strategy of creating content around very specific long-tail phrases is so effective that websites like Quora are visited by 60,428,999 users every month from Google alone in the United States.

- BERT Will Help Voice Search - The intent of voice search queries has been more difficult to understand. So, when optimizing for voice search, SEOs need to use conversational language in their content.

- International SEO Is Highly Affected - BERT can go from mono-lingual to multi-lingual because many patterns in one language carry over into other languages. It is possible to transfer much of what the model learns to different languages, even though it doesn’t necessarily understand each language fully.

- Decreased Value of Long Content - Long content is devalued. With BERT, Google recognizes that long content does not necessarily mean better information. Matching the searcher’s intent, even with short content, can benefit you in terms of ranking and quality traffic.

- Structured Data & Schema Markup - If the web page content covers a broad topic, the schema markup should reflect the content that matches the searcher’s query and intent.
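As one possible illustration of the structured data point above, the sketch below builds a schema.org `FAQPage` object as JSON-LD, phrasing the question the way a searcher might ask it. The choice of `FAQPage` is an assumption (the article does not name a schema type), and the question and answer strings are hypothetical placeholders.

```python
import json


def faq_jsonld(question, answer):
    """Build a schema.org FAQPage object containing a single question."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
        ],
    }


# Hypothetical question and answer text, for illustration only.
markup = faq_jsonld(
    "Does a Brazilian traveler need a visa to visit the USA?",
    "Placeholder answer text taken from the page content.",
)

# The resulting JSON would go inside a <script type="application/ld+json"> tag.
print(json.dumps(markup, indent=2))
```

On a broad-topic page, writing the `name` field as a natural-language question is one way to keep the markup aligned with a specific query and its intent.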

What Can BERT Not Understand?

BERT isn't very good at understanding negation, or queries about what things are not. If you apply negation to a query and search for it on Google, you will not see the same results even though the intent is similar.

BERT’s Implications on Paid Search

With BERT, Google understands that all the words in a query should be interpreted together, returning more focused and more informative organic results.

Paid search ads need to be considerably more focused to persuade Google that they are relevant enough to appear alongside increasingly well-curated organic results.

Because of budget and organizational constraints, it isn't always feasible to run campaigns for every specific topic in your niche, but it can be something to work toward. Besides reviewing the organic results for your main search terms, search query reports also offer valuable insights. If possible, it is best to create new ad groups for these newly identified terms.

Bing is Utilizing BERT

Bing revealed on 19-Nov-2019 that it had been using BERT in search results before Google, and at a larger scale. Bing has been using BERT since April 2019, roughly half a year ahead of Google.